Cutting over Let's Encrypt's Statistics to Map/Reduce

Published 2017-07-10

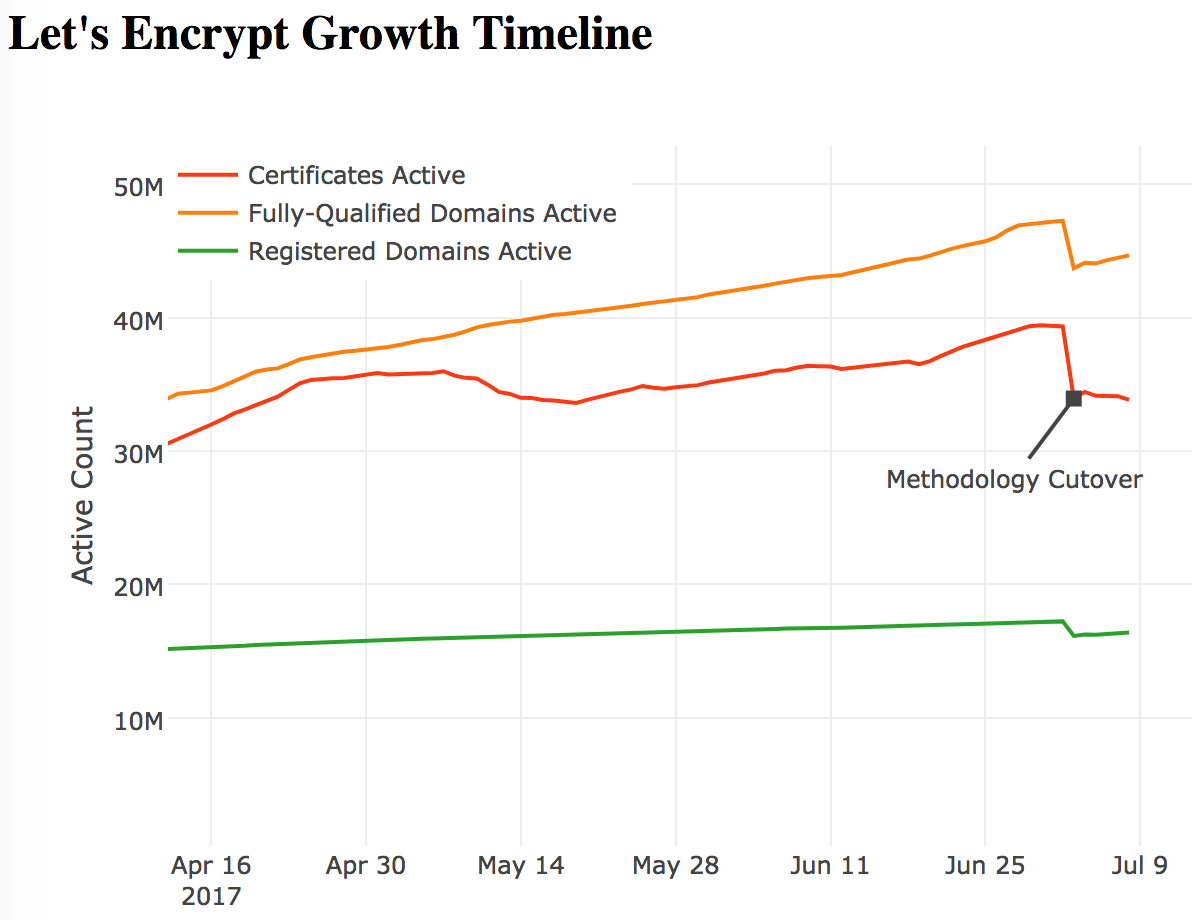

We’re changing the methodology used to calculate the Let’s Encrypt Statistics page, primarily to better cope with the growth of Let’s Encrypt. Over the past several months it’s become clear that the existing methodology is less accurate than we had expected: it over-counts both the number of websites using Let’s Encrypt and the number of active certificates. The new methodology is easier to spot-check, so we believe it is more accurate.

We plan to cut over all data in the Let’s Encrypt statistics dataset used for the graphs soon, using the new, more accurate data from 3 July 2017 onward. As a result, the data and graphs will show the count of Active Certificates falling by ~14% between 2 and 3 July, and the counts of Registered Domains and Fully-Qualified Domain Names each falling by ~7%.

You can preview the new graphs at https://ct.tacticalsecret.com/, as well as look at the old and new datasets, and a diff.

Shifting Methodology

These days I volunteer to process the Certificate Transparency logs for Let’s Encrypt’s statistics.

Previously, I used a tool to process Certificate Transparency logs and insert metadata into a SQL database, and then queried that database to derive all of the statistics we display and use to track Let’s Encrypt’s growth. As the database has grown, it has become increasingly expensive to maintain. The SQL methodology wasn’t intended for long-term statistics, but as with most established infrastructure, it was difficult to carve out the time needed to revamp it.

The revamp is finally ready, using a Map/Reduce approach that can scale much further than a SQL database.
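To make the Map/Reduce idea concrete, here is a minimal Python sketch; it is an illustration, not the actual tool's code. It assumes each CT log entry has already been parsed into a hypothetical `CertEntry` record holding the issuance day and the certificate's DNS names. The map step emits one (issuance day, FQDN) pair per name, and the reduce step groups those pairs into one de-duplicated, sorted list per day.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, Iterator, List, NamedTuple, Tuple


class CertEntry(NamedTuple):
    """Hypothetical, already-parsed view of one CT log entry."""
    issued: date             # issuance day, e.g. taken from notBefore
    names: Tuple[str, ...]   # DNS names from the subjectAltName extension


def map_entry(entry: CertEntry) -> Iterator[Tuple[date, str]]:
    """Map: emit one (issuance day, FQDN) pair per name on the certificate."""
    for name in entry.names:
        # DNS names are case-insensitive; also drop any trailing root dot.
        yield entry.issued, name.lower().rstrip(".")


def reduce_by_day(pairs: Iterable[Tuple[date, str]]) -> Dict[date, List[str]]:
    """Reduce: collect the distinct FQDNs seen on each issuance day."""
    per_day = defaultdict(set)
    for day, fqdn in pairs:
        per_day[day].add(fqdn)
    return {day: sorted(fqdns) for day, fqdns in per_day.items()}


def daily_fqdn_lists(entries: Iterable[CertEntry]) -> Dict[date, List[str]]:
    """Run the map step over every entry, then the reduce step."""
    pairs = (pair for entry in entries for pair in map_entry(entry))
    return reduce_by_day(pairs)
```

Each map call depends on a single log entry and the reduce step only merges sets, so the work can be split across processes or machines; that property is what lets a Map/Reduce design scale further than a single SQL database.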

Why did the old way overcount?

Some of the domain overcounting appears to have been caused by domains on SAN certificates not always being purged when those certificates expired without being renewed. This only happened when a domain was part of a SAN certificate that was later re-issued with a somewhat different set of domains: the domains dropped from the new certificate were still counted even after the old certificate expired. This seeming edge case appears to have happened quite a lot for some hosting providers.
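The sketch below illustrates the general idea of recomputing counts from the certificates themselves instead of maintaining a domain table that has to be purged correctly. It is not the author's code: the `Cert` record and `issue` helper are hypothetical, dates stand in for timestamps, and a 90-day lifetime is assumed.

```python
from datetime import date, timedelta
from typing import FrozenSet, Iterable, NamedTuple, Set

LIFETIME = timedelta(days=90)  # Let's Encrypt certificate lifetime


class Cert(NamedTuple):
    """Hypothetical minimal certificate record (day precision only)."""
    not_before: date
    not_after: date
    names: FrozenSet[str]


def issue(not_before: date, *names: str) -> Cert:
    """Build a certificate that is valid for LIFETIME from `not_before`."""
    return Cert(not_before, not_before + LIFETIME, frozenset(names))


def active_fqdns(certs: Iterable[Cert], on_day: date) -> Set[str]:
    """FQDNs on at least one certificate that is valid on `on_day`.

    The set is rebuilt from the certificates each time, so a name dropped when
    a SAN certificate is re-issued stops counting as soon as the last
    certificate containing it expires; there is no table to purge.
    """
    active: Set[str] = set()
    for cert in certs:
        if cert.not_before <= on_day <= cert.not_after:
            active |= cert.names
    return active
```

For example, if a certificate for `a.example` and `b.example` issued on 2017-03-01 is re-issued on 2017-05-01 for `a.example` only, `b.example` is still counted on 2017-05-15 but not on 2017-06-15, because the original certificate expired on 2017-05-30.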

The active certificate overcounting is partly due to new certificates added during nightly maintenance being effectively double-counted. Jacob pointed out that, assuming Let’s Encrypt’s average issuance rate, each hour of maintenance would inflate the active certificate count by ~5%. Maintenance with the SQL code took between 1 and 4 hours each night, which works out to roughly 5% to 20% inflation, so this could easily account for the discrepancy in the active certificate count.

There are likely other more subtle counting errors, too.

How do we know it’s better now?

The new Map/Reduce approach produces a discrete list of domains for each issuance day, and these lists are easier to inspect when debugging, so I feel more confident in the results. The domain lists are also available as (huge) datasets at https://ct.tacticalsecret.com/ (I should move them to S3 for performance reasons). Line counts in the “FQDN” and “RegDom” lists should match the figures for the most recent day’s entry. At least, so far they have…
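A spot check like this is easy to script. The sketch below assumes a downloaded list is plain UTF-8 text with one name per line; it streams the file, since the lists are large, and counts the non-blank lines. The function names are illustrative, not part of any published tooling.

```python
from pathlib import Path
from typing import Iterable


def count_entries(lines: Iterable[str]) -> int:
    """Count non-blank lines, i.e. one domain name per line (assumed format)."""
    return sum(1 for line in lines if line.strip())


def spot_check(list_path: Path, published_count: int) -> bool:
    """Compare a downloaded list's line count with the figure on the stats page."""
    with list_path.open("r", encoding="utf-8") as handle:  # streamed; lists are huge
        actual = count_entries(handle)
    if actual != published_count:
        print(f"{list_path.name}: {actual} lines, stats page says {published_count}")
    return actual == published_count
```

For example, `spot_check(Path("fqdn-list.txt"), published_fqdn_count)` returns `True` when the counts agree; both arguments are placeholders for a downloaded list and the figure read off the statistics page.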

Reprocessing historical logs?

It’s technically possible to reprocess the historical Certificate Transparency data for Let’s Encrypt to improve its accuracy, but I haven’t yet decided whether I will do this. All the data and software are public, so others could take on this effort if desired.

Technical Details

Through 2016, the SQL database moved between various hosting providers in search of the best deal on the RAM needed to keep up with its growth, ultimately landing on Amazon’s RDS last winter. A single db.r3.large RDS instance handles the size well, but is quite expensive for this use case.

The new Map/Reduce effort currently runs on a single Amazon m4.xlarge EC2 instance with 150 GB of disk space, enough to hold 90 days of raw CT log information, the daily summary files, and the 90-day summaries that populate the statistics. The instance only needs to run about 2 hours a day to catch up on Certificate Transparency and produce the resulting dataset. When it needs to scale up again, I’ll likely move to an Apache Spark cluster.
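The step from daily summary files to 90-day summaries can be sketched as a union over a sliding window. This minimal Python version is an assumption-laden illustration: it expects hypothetical daily files named like `fqdn-2017-07-03.txt` with one name per line, and treats a name as active if it appeared on a certificate issued within the last 90 days, which approximates Let’s Encrypt’s 90-day certificate lifetime.

```python
from datetime import date, timedelta
from pathlib import Path
from typing import Dict, Iterable, List, Set

WINDOW_DAYS = 90  # Let's Encrypt certificates are valid for 90 days


def window_days(end: date, days: int = WINDOW_DAYS) -> List[date]:
    """The `days` issuance days ending on `end`, inclusive, newest first."""
    return [end - timedelta(days=offset) for offset in range(days)]


def load_daily_lists(directory: Path, prefix: str = "fqdn") -> Dict[date, Set[str]]:
    """Read daily summary files named e.g. fqdn-2017-07-03.txt (hypothetical naming)."""
    lists = {}
    for path in directory.glob(f"{prefix}-*.txt"):
        day = date.fromisoformat(path.stem[len(prefix) + 1:])
        with path.open(encoding="utf-8") as handle:
            lists[day] = {line.strip() for line in handle if line.strip()}
    return lists


def rollup(daily_lists: Dict[date, Iterable[str]], end: date,
           days: int = WINDOW_DAYS) -> Set[str]:
    """Union the daily lists inside the window; days with no file are skipped."""
    combined: Set[str] = set()
    for day in window_days(end, days):
        combined.update(daily_lists.get(day, ()))
    return combined
```

Under these assumptions, `len(rollup(load_daily_lists(Path("summaries")), date(2017, 7, 3)))` gives the FQDN figure for 3 July. Daily files older than the window never contribute, so they can be deleted without changing the result.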

We’ll see how fast Let’s Encrypt needs it. :)

(Also posted at https://community.letsencrypt.org/t/adjustments-to-the-lets-encrypt-statistics-methodology/37866)